Overview

MCPurify is middleware that sanitizes Model Context Protocol (MCP) service requests. It is intended to run as a background service: it intercepts incoming requests to MCP services, analyzes them, and returns a response containing the recommended action. The crate can be integrated into your project as a library or run as a standalone executable to sanitize MCP client requests. MCPurify also includes a simple forwarding proxy that connects to external AI models with tool support, so these models can use tools as part of their request processing within MCP-based systems.

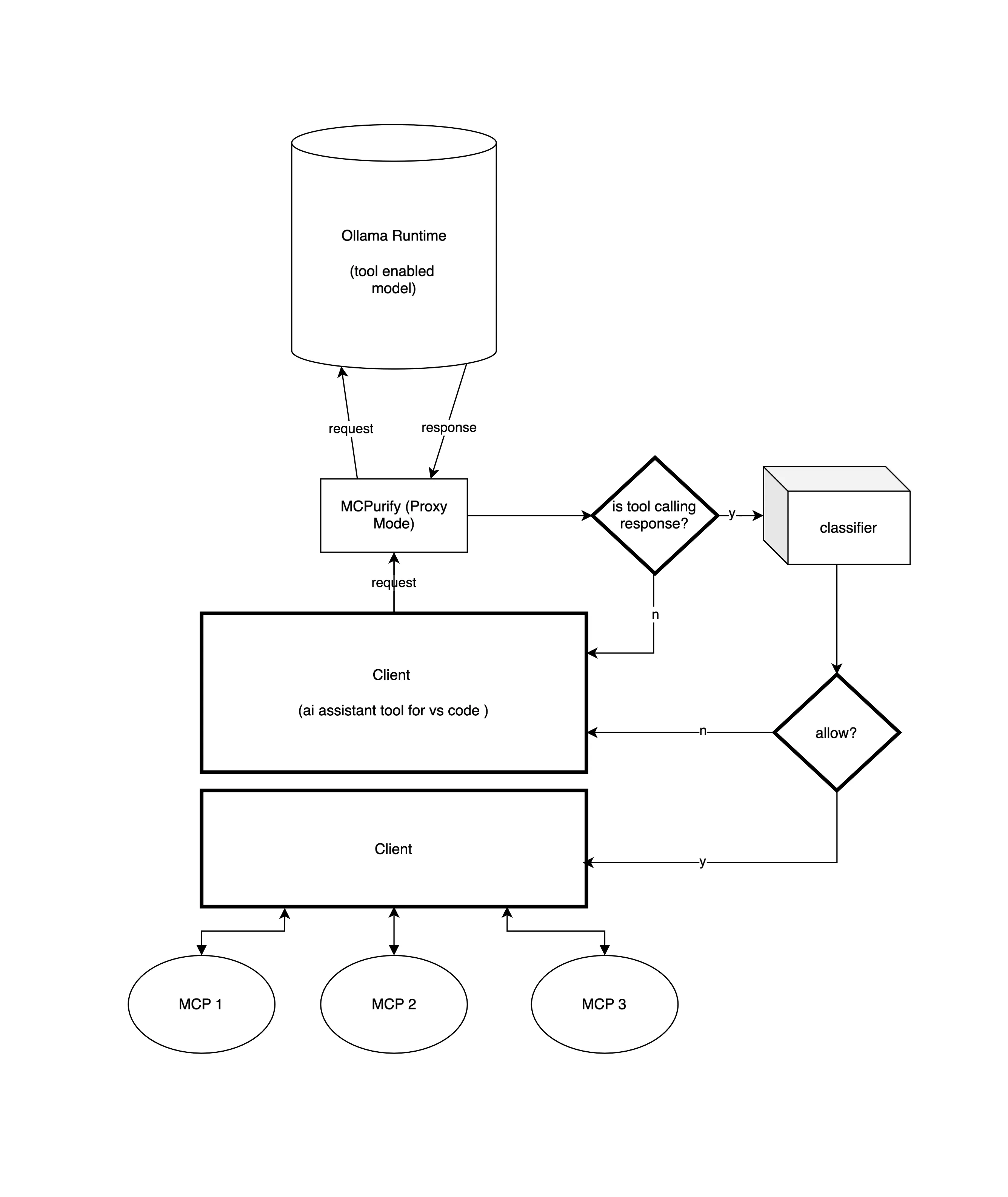

Architecture

The diagram above illustrates how MCPurify operates. In this setup, MCPurify acts as a forwarding proxy that handles HTTP and HTTPS traffic to supported runtimes such as Ollama and vLLM, or to AI providers that allow tool calls, such as Claude, OpenAI’s ChatGPT, and Microsoft Copilot. The client tells the model which tools are available via the Model Context Protocol (MCP). When the model requests the output of a tool, MCPurify forwards the request to a classifier model that has been trained to detect common attack vectors on MCP services. The classifier either allows or denies the tool call, and MCPurify informs the client of the result. If the tool call is allowed, the client uses the approved request to invoke the corresponding MCP service. The classifier model is open source and included in the MCPurify release, so the decision-making process can be inspected.
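
The allow/deny step described above can be sketched in a few lines of Rust. This is only an illustration of the control flow, not MCPurify's actual API: the names `ToolCall`, `Verdict`, `Classifier`, `KeywordClassifier`, and `review_tool_call` are hypothetical, and the keyword match merely stands in for the trained classifier model.

```rust
/// A tool call requested by the model (hypothetical shape, not MCPurify's type).
#[derive(Debug, Clone, PartialEq)]
pub struct ToolCall {
    pub tool: String,
    pub arguments: String,
}

/// The classifier's decision for a single tool call.
#[derive(Debug, Clone, PartialEq)]
pub enum Verdict {
    Allow,
    Deny { reason: String },
}

/// Anything that can judge a tool call. In MCPurify this role is played by
/// the bundled classifier model; the trait itself is an assumption.
pub trait Classifier {
    fn classify(&self, call: &ToolCall) -> Verdict;
}

/// Stand-in classifier that denies calls whose arguments contain a blocked
/// phrase. A trained model would replace this simple substring check.
pub struct KeywordClassifier {
    blocked: Vec<&'static str>,
}

impl Classifier for KeywordClassifier {
    fn classify(&self, call: &ToolCall) -> Verdict {
        // Compare case-insensitively by lowercasing the arguments;
        // the blocked phrases are expected to be lowercase already.
        let args = call.arguments.to_lowercase();
        for pattern in &self.blocked {
            if args.contains(*pattern) {
                return Verdict::Deny {
                    reason: format!("matched blocked pattern `{}`", pattern),
                };
            }
        }
        Verdict::Allow
    }
}

/// Returns the approved call so the client can invoke the MCP service,
/// or the denial reason so the client can be informed of the result.
pub fn review_tool_call<C: Classifier>(classifier: &C, call: ToolCall) -> Result<ToolCall, String> {
    match classifier.classify(&call) {
        Verdict::Allow => Ok(call),
        Verdict::Deny { reason } => Err(reason),
    }
}
```

In this sketch the client would only invoke the MCP service when `review_tool_call` returns `Ok`, which mirrors the rule that only approved requests reach the service.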